Creating a Raspberry Pi 3 B+ Kubernetes Cluster

Goal

Although GCE is perfect for learning Kubernetes, building Kubernetes on top of a Pi cluster adds another dimension to the learning: setting up the OS, partitioning, DHCP, NAT, and cross-compiling for ARM32v7.

Key Aspects

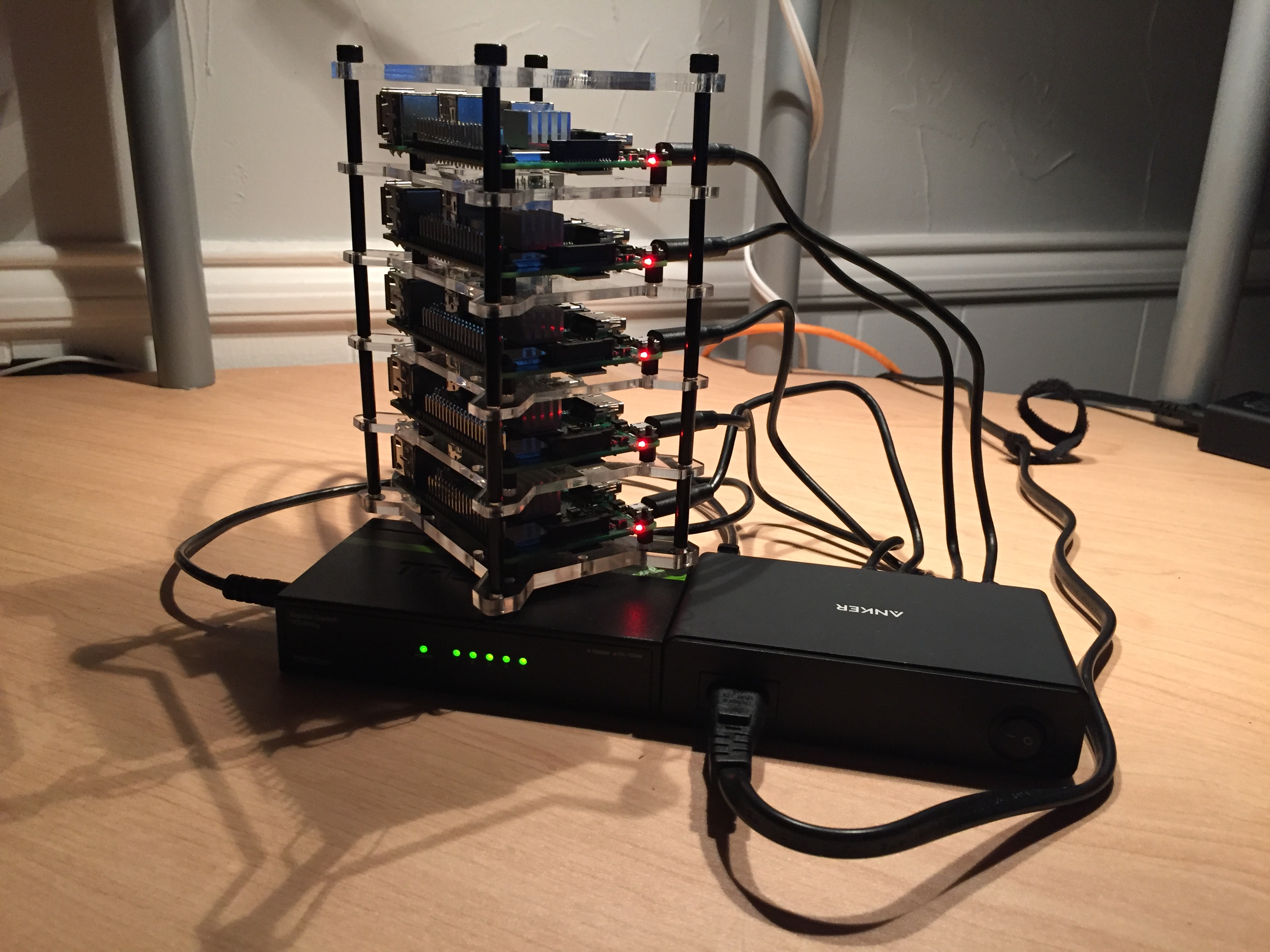

- Build a Raspberry Pi 3 B+ cluster

- Deploy Kubernetes on that cluster

Hardware

Reference Links

Procedure

- Loosely followed the video.

- Used a premade rack instead.

- Adapted to the Raspberry Pi 3 B+ (1 Gb network card instead of 100 Mb).

Result

Cluster 1: 5-node cluster

Cluster 2: 3-node cluster

OS

Reference Links

Procedure

- Used HypriotOS because it is the quickest to set up: SSH and Docker are supported by default.

- Ubuntu Core was not ready for the Raspberry Pi 3 B+.

- Even though the processor is 64-bit, the OS is still 32-bit (memory is small anyway).

- Resin.io OS no longer supports Docker and uses balena instead, so it would not work with Kubernetes.

- The video had a couple of typos in the DHCP installation.

- Removed cloud-init once the site was up.

- TBD
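
The 32-bit OS matters later because it determines which image architecture the nodes need. A minimal sketch of how to check this on a node: the `k8s_arch` helper below is hypothetical (not part of any tool), and it assumes a 32-bit kernel on the Pi reports `armv7l` from `uname -m`.

```shell
# Map the machine string reported by `uname -m` to the architecture
# name Kubernetes uses in the beta.kubernetes.io/arch node label.
# k8s_arch is an illustrative helper, not an existing command.
k8s_arch() {
  case "$1" in
    armv6l|armv7l) echo arm ;;      # 32-bit ARM kernels
    aarch64)       echo arm64 ;;    # 64-bit ARM kernels
    x86_64)        echo amd64 ;;    # typical PC or cloud VM
    *)             echo unknown ;;
  esac
}

# Print the label value for the machine this runs on.
k8s_arch "$(uname -m)"
```

On a 32-bit OS this should report `arm` even though the CPU itself is 64-bit capable, which is why the `arm` images are the ones to deploy.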

Kubernetes

Reference Links

Procedure for Cluster 2

- Initially hit an issue with 10.04: the kubelet would not start. Downgraded to Kubernetes 1.9.8 (Cluster 1).

- The issue was fixed in Kubernetes 1.11 (Cluster 2).
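
One possible way to install a specific release rather than the latest is to pass `package=version` arguments to `apt-get`. This is only a sketch: `pinned_packages` is a hypothetical helper, and the `-00` revision suffix is an assumption about how the repository names its packages, so check the output of `apt-cache madison kubeadm` first.

```shell
# Build "package=version" arguments so every Kubernetes package is
# installed at the same release.
# ASSUMPTION: the "-00" package revision suffix; verify it with
# `apt-cache madison kubeadm` before relying on it.
pinned_packages() {
  version="$1"; shift
  out=""
  for pkg in "$@"; do
    out="$out $pkg=${version}-00"
  done
  echo "${out# }"   # drop the leading space
}

# Show the arguments that would be passed to apt-get.
pinned_packages 1.9.8 kubeadm kubelet kubectl

# Real use, as root:
#   apt-get install -y $(pinned_packages 1.9.8 kubeadm kubelet kubectl)
```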

sudo -i

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list

apt-get update && apt-get install -y kubeadm

# kubeadm init --pod-network-cidr 10.244.0.0/16 --apiserver-advertise-address 192.168.1.95

I0706 00:35:34.429323 14024 feature_gate.go:230] feature gates: &{map[]}

[init] using Kubernetes version: v1.11.0

[preflight] running pre-flight checks

I0706 00:35:34.656171 14024 kernel_validator.go:81] Validating kernel version

I0706 00:35:34.657051 14024 kernel_validator.go:96] Validating kernel config

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 18.05.0-ce. Max validated version: 17.03

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [master-pi kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.95]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [master-pi localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [master-pi localhost] and IPs [192.168.1.95 127.0.0.1 ::1]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 77.506994 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.11" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node master-pi as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node master-pi as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "master-pi" as an annotation

[bootstraptoken] using token: 120d6b.yyyyyyy

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.1.95:6443 --token 120d6b.yyyyyyy --discovery-token-ca-cert-hash sha256:xxxxxx

On the master:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes

curl -sSL https://rawgit.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml | sed "s/amd64/arm/g" | kubectl create -f -
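
The `sed` step in the command above is what makes the manifest usable on the Pi: it rewrites every `amd64` to `arm` before the manifest reaches `kubectl`. A small sketch of the effect, using two sample lines written in the style of a flannel manifest (they are illustrative, not copied from the real file):

```shell
# Rewrite every "amd64" in a manifest read from stdin to "arm".
# to_arm is an illustrative wrapper around the same sed expression.
to_arm() {
  sed "s/amd64/arm/g"
}

# Illustrative sample lines; the real manifest is much longer.
printf '%s\n' \
  'beta.kubernetes.io/arch: amd64' \
  'image: quay.io/coreos/flannel:v0.9.1-amd64' | to_arm
```

Both the node selector and the image tag are rewritten, which matches the `beta.kubernetes.io/arch=arm` selector on the flannel DaemonSet in the cluster listings.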

On the first node:

kubeadm join 192.168.1.95:6443 --token 120d6b.yyyyyyy --discovery-token-ca-cert-hash sha256:xxxxxx

On the second node:

kubeadm join 192.168.1.95:6443 --token 120d6b.yyyyyyy --discovery-token-ca-cert-hash sha256:xxxxxx
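
Since the same join command is repeated on every node, it can help to assemble it once from its three parts. A minimal sketch, where `join_command` is a hypothetical helper and the token and hash are the redacted placeholders from the output above:

```shell
# Assemble the kubeadm join command from its three parts.
#   $1 = API server host:port
#   $2 = bootstrap token
#   $3 = CA certificate hash (without the "sha256:" prefix)
join_command() {
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}

# Placeholder values taken from the (redacted) kubeadm init output.
join_command 192.168.1.95:6443 120d6b.yyyyyyy xxxxxx
```

If the original token has expired, running `kubeadm token create --print-join-command` on the master prints a fresh, complete join command.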

Kubernetes

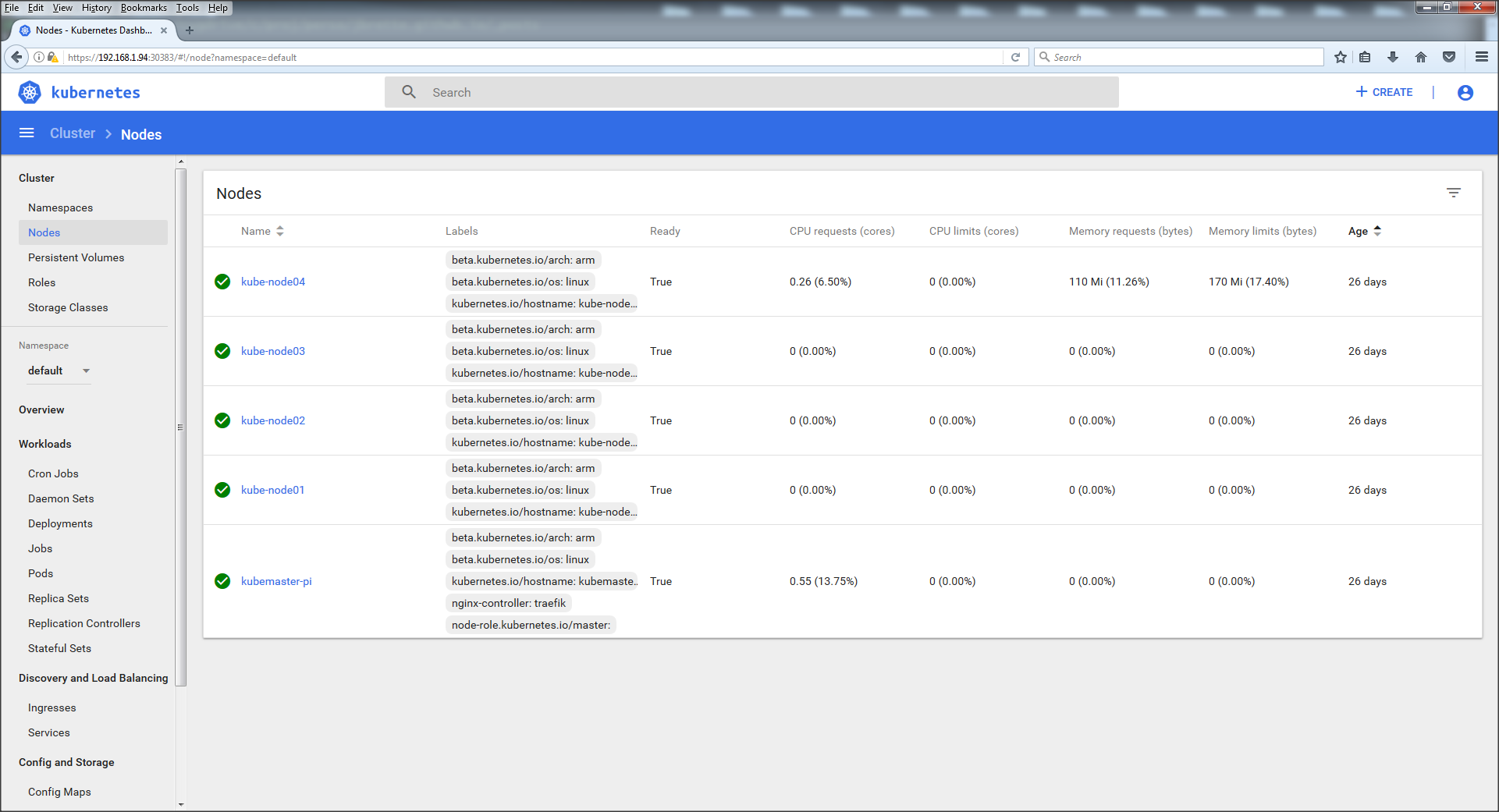

Cluster 1: 5-node cluster

kubectl get nodes

NAME STATUS ROLES AGE VERSION

kube-node01 Ready <none> 23d v1.9.8

kube-node02 Ready <none> 23d v1.9.8

kube-node03 Ready <none> 23d v1.9.8

kube-node04 Ready <none> 23d v1.9.8

kubemaster-pi Ready master 23d v1.9.8
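
A listing like this can also be checked by a script rather than by eye. The sketch below counts the nodes whose STATUS column is `Ready`; `count_ready` is a hypothetical helper, and the sample input is the listing above.

```shell
# Count nodes whose STATUS column (field 2) is exactly "Ready".
# Reads `kubectl get nodes` output on stdin and skips the header row.
count_ready() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Sample input: the Cluster 1 listing shown above.
cat <<'EOF' | count_ready
NAME STATUS ROLES AGE VERSION
kube-node01 Ready <none> 23d v1.9.8
kube-node02 Ready <none> 23d v1.9.8
kube-node03 Ready <none> 23d v1.9.8
kube-node04 Ready <none> 23d v1.9.8
kubemaster-pi Ready master 23d v1.9.8
EOF

# Real use: kubectl get nodes | count_ready
```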

kubectl get all --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default pod/hypriot-587768b4f5-7bqpz 1/1 Running 0 23d

default pod/hypriot-587768b4f5-b8xjq 1/1 Running 0 23d

default pod/hypriot-587768b4f5-s2mzt 1/1 Running 0 23d

kube-system pod/etcd-kubemaster-pi 1/1 Running 0 23d

kube-system pod/kube-apiserver-kubemaster-pi 1/1 Running 0 23d

kube-system pod/kube-controller-manager-kubemaster-pi 1/1 Running 0 23d

kube-system pod/kube-dns-7b6ff86f69-l7lf6 3/3 Running 0 23d

kube-system pod/kube-flannel-ds-8xbx4 1/1 Running 0 23d

kube-system pod/kube-flannel-ds-9cz9f 1/1 Running 0 23d

kube-system pod/kube-flannel-ds-rgpcq 1/1 Running 0 23d

kube-system pod/kube-flannel-ds-xnjtz 1/1 Running 0 23d

kube-system pod/kube-flannel-ds-xxdf6 1/1 Running 0 23d

kube-system pod/kube-proxy-5m95q 1/1 Running 0 23d

kube-system pod/kube-proxy-7sh7m 1/1 Running 0 23d

kube-system pod/kube-proxy-f7t9r 1/1 Running 0 23d

kube-system pod/kube-proxy-pkqvd 1/1 Running 0 23d

kube-system pod/kube-proxy-shrdr 1/1 Running 0 23d

kube-system pod/kube-scheduler-kubemaster-pi 1/1 Running 0 23d

kube-system pod/kubernetes-dashboard-7fcc5cb979-8vbmp 1/1 Running 0 23d

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/hypriot ClusterIP 10.110.24.241 <none> 80/TCP 23d

default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23d

kube-system service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 23d

kube-system service/kubernetes-dashboard NodePort 10.102.144.189 <none> 443:30383/TCP 23d

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.extensions/kube-flannel-ds 5 5 5 5 5 beta.kubernetes.io/arch=arm 23d

kube-system daemonset.extensions/kube-proxy 5 5 5 5 5 <none> 23d

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

default deployment.extensions/hypriot 3 3 3 3 23d

kube-system deployment.extensions/kube-dns 1 1 1 1 23d

kube-system deployment.extensions/kubernetes-dashboard 1 1 1 1 23d

NAMESPACE NAME DESIRED CURRENT READY AGE

default replicaset.extensions/hypriot-587768b4f5 3 3 3 23d

kube-system replicaset.extensions/kube-dns-7b6ff86f69 1 1 1 23d

kube-system replicaset.extensions/kubernetes-dashboard-7fcc5cb979 1 1 1 23d

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/kube-flannel-ds 5 5 5 5 5 beta.kubernetes.io/arch=arm 23d

kube-system daemonset.apps/kube-proxy 5 5 5 5 5 <none> 23d

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

default deployment.apps/hypriot 3 3 3 3 23d

kube-system deployment.apps/kube-dns 1 1 1 1 23d

kube-system deployment.apps/kubernetes-dashboard 1 1 1 1 23d

NAMESPACE NAME DESIRED CURRENT READY AGE

default replicaset.apps/hypriot-587768b4f5 3 3 3 23d

kube-system replicaset.apps/kube-dns-7b6ff86f69 1 1 1 23d

kube-system replicaset.apps/kubernetes-dashboard-7fcc5cb979 1 1 1 23d

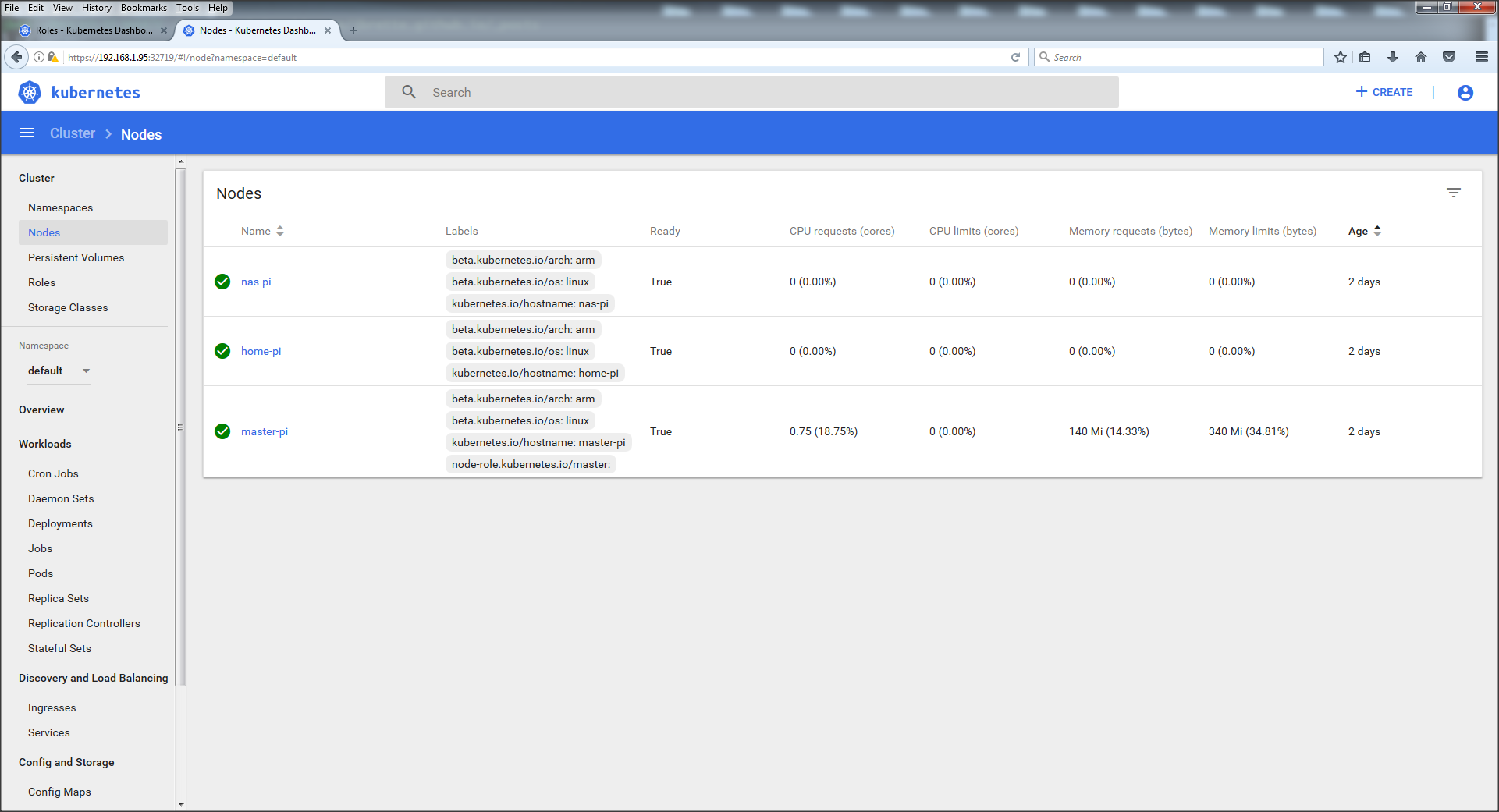

Cluster 2: 3-node cluster

kubectl get nodes

NAME STATUS ROLES AGE VERSION

home-pi Ready <none> 56m v1.11.0

master-pi Ready master 1h v1.11.0

nas-pi Ready <none> 5m v1.11.0

kubectl get all --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/coredns-78fcdf6894-cw5p8 1/1 Running 0 59m

kube-system pod/coredns-78fcdf6894-czjcj 1/1 Running 0 1h

kube-system pod/etcd-master-pi 1/1 Running 0 1h

kube-system pod/kube-apiserver-master-pi 1/1 Running 0 59m

kube-system pod/kube-controller-manager-master-pi 1/1 Running 12 1h

kube-system pod/kube-flannel-ds-bhllh 1/1 Running 2 3m

kube-system pod/kube-flannel-ds-q7cp2 1/1 Running 0 22m

kube-system pod/kube-flannel-ds-wqxsz 1/1 Running 0 22m

kube-system pod/kube-proxy-4chwh 1/1 Running 0 45m

kube-system pod/kube-proxy-6r5mn 1/1 Running 0 3m

kube-system pod/kube-proxy-vvj6j 1/1 Running 0 1h

kube-system pod/kube-scheduler-master-pi 1/1 Running 0 59m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 1h

kube-system service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 1h

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/kube-flannel-ds 3 3 3 3 3 beta.kubernetes.io/arch=arm 53m

kube-system daemonset.apps/kube-proxy 3 3 3 3 3 beta.kubernetes.io/arch=arm 1h

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/coredns 2 2 2 2 1h

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/coredns-78fcdf6894 2 2 2 1h
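
The RESTARTS column in the listing above is worth a second look, since two pods restarted during setup. A small sketch that pulls out only the pods with a non-zero restart count; `restarted_pods` is a hypothetical helper and the sample rows are taken from the listing above.

```shell
# Print the name and restart count of every pod that has restarted.
# Expects `kubectl get all --all-namespaces` output on stdin, where
# pod rows are: NAMESPACE NAME READY STATUS RESTARTS AGE.
restarted_pods() {
  awk '$2 ~ /^pod\// && $5 + 0 > 0 { print $2, $5 }'
}

# Sample rows copied from the Cluster 2 listing above.
cat <<'EOF' | restarted_pods
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system pod/kube-controller-manager-master-pi 1/1 Running 12 1h
kube-system pod/kube-flannel-ds-bhllh 1/1 Running 2 3m
kube-system pod/kube-proxy-vvj6j 1/1 Running 0 1h
kube-system service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 1h
EOF

# Real use: kubectl get all --all-namespaces | restarted_pods
```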

Kubernetes Dashboard

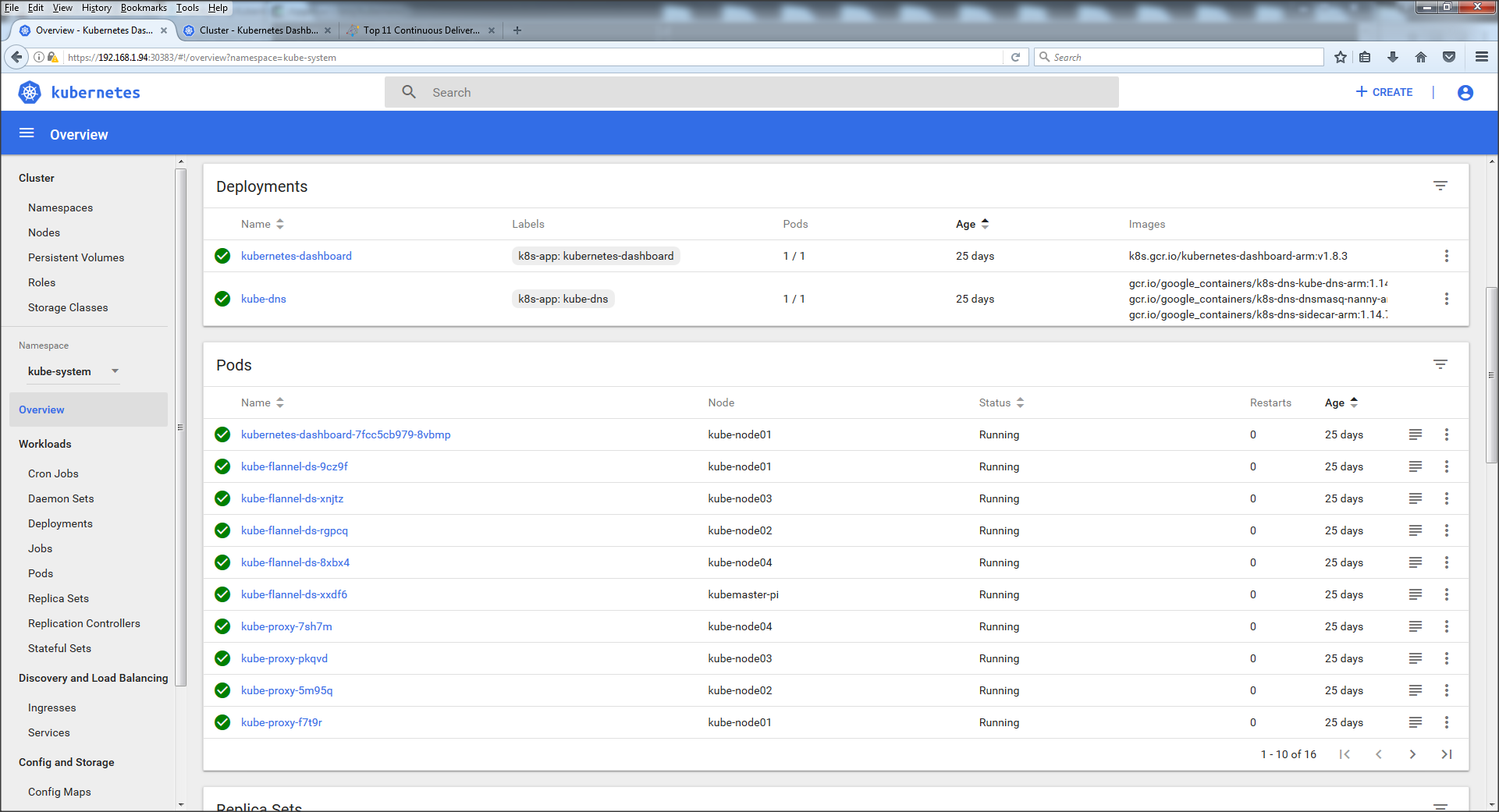

Cluster 1: 5-node cluster

List of the nodes in Cluster 1

Kubernetes kube-system overview of Cluster 1

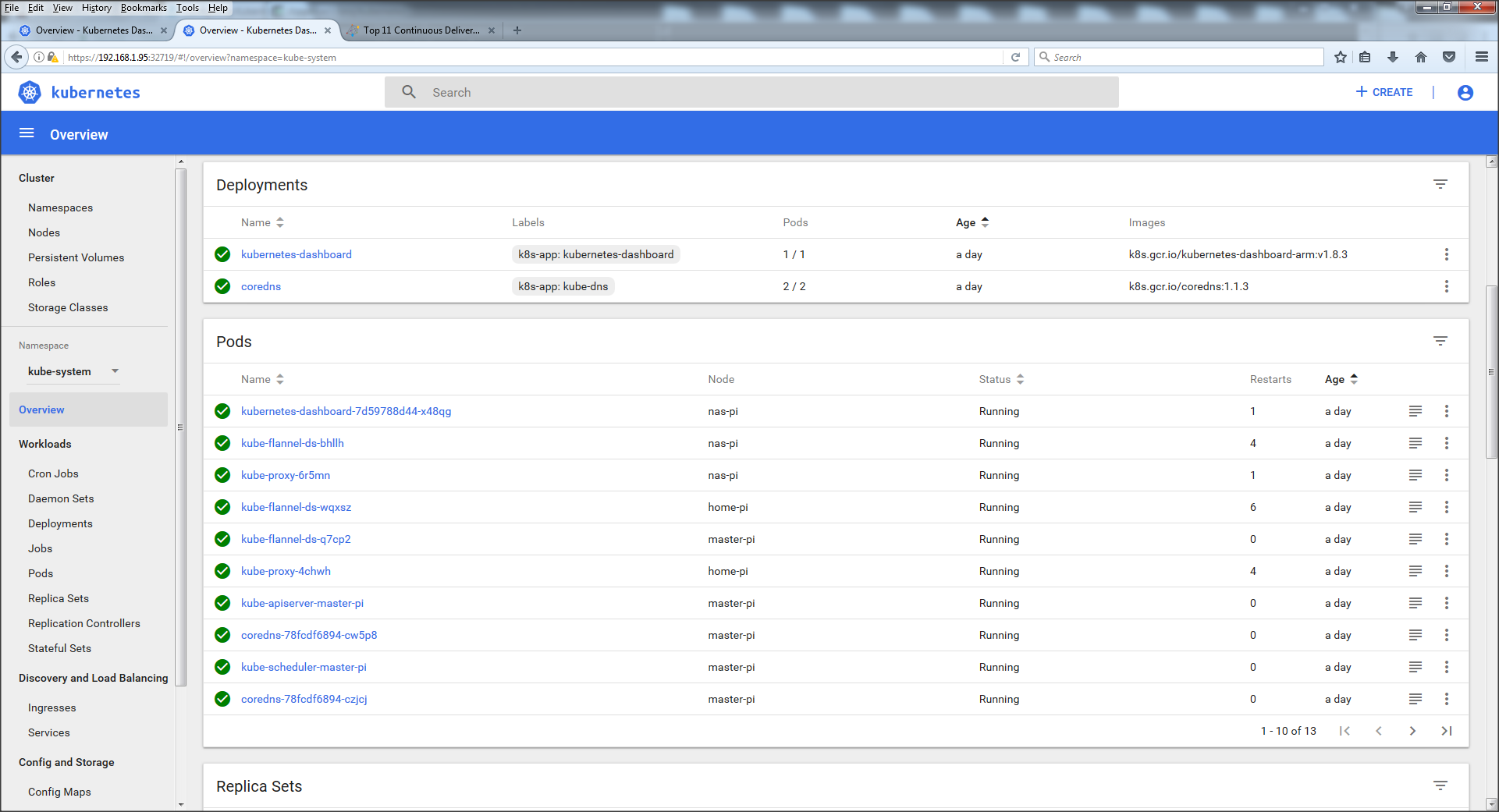

Cluster 2: 3-node cluster

List of the nodes in Cluster 2

Kubernetes kube-system overview of Cluster 2

Cleanup

sudo kubeadm reset

sudo docker rm $(sudo docker ps -qa)

sudo docker image rm $(sudo docker image list -qa)

sudo apt-get upgrade
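
One caveat with the `docker rm $(...)` form above: when there are no containers or images left, the inner command prints nothing and `docker rm` fails because it requires at least one argument. A possible guard is sketched below; `remove_all` is a hypothetical helper, and the demo uses `echo` in place of the real command so it is safe to run anywhere.

```shell
# Run the given command with the IDs read from stdin appended,
# but only when there is at least one ID.
remove_all() {
  ids=$(cat)
  if [ -z "$ids" ]; then
    echo "nothing to remove"
    return 0
  fi
  # $ids is intentionally unquoted so each ID becomes its own argument.
  "$@" $ids
}

# Dry-run demo with made-up IDs; `echo` stands in for sudo.
printf 'abc123\ndef456\n' | remove_all echo docker rm
printf '' | remove_all echo docker rm

# Real use:
#   sudo docker ps -qa | remove_all sudo docker rm
#   sudo docker image list -qa | remove_all sudo docker image rm
```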